Paper award for training computer vision systems more accurately

PhD student Jean Young Song offers an improved solution to the problem of image segmentation.

Enlarge

Enlarge

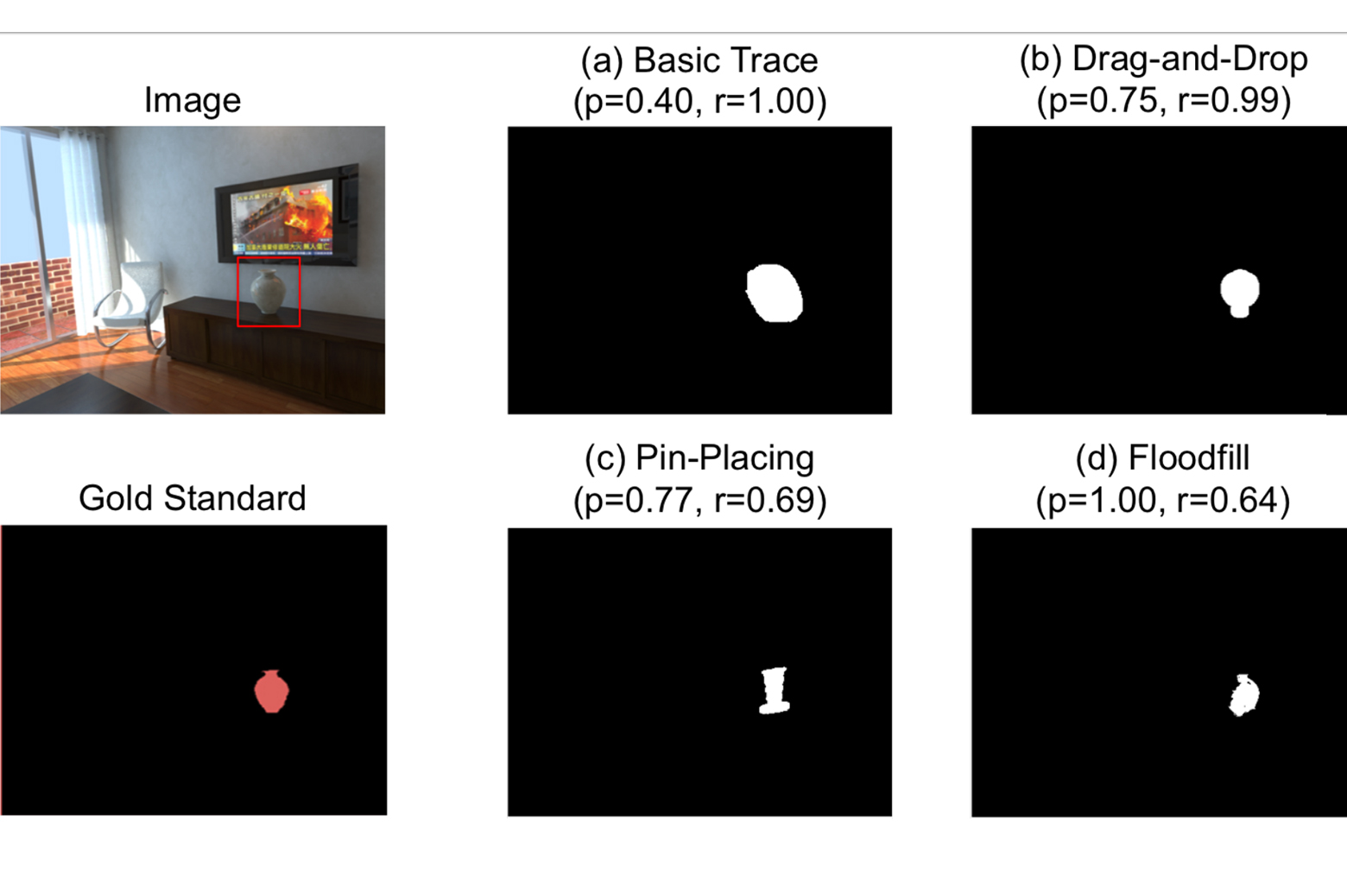

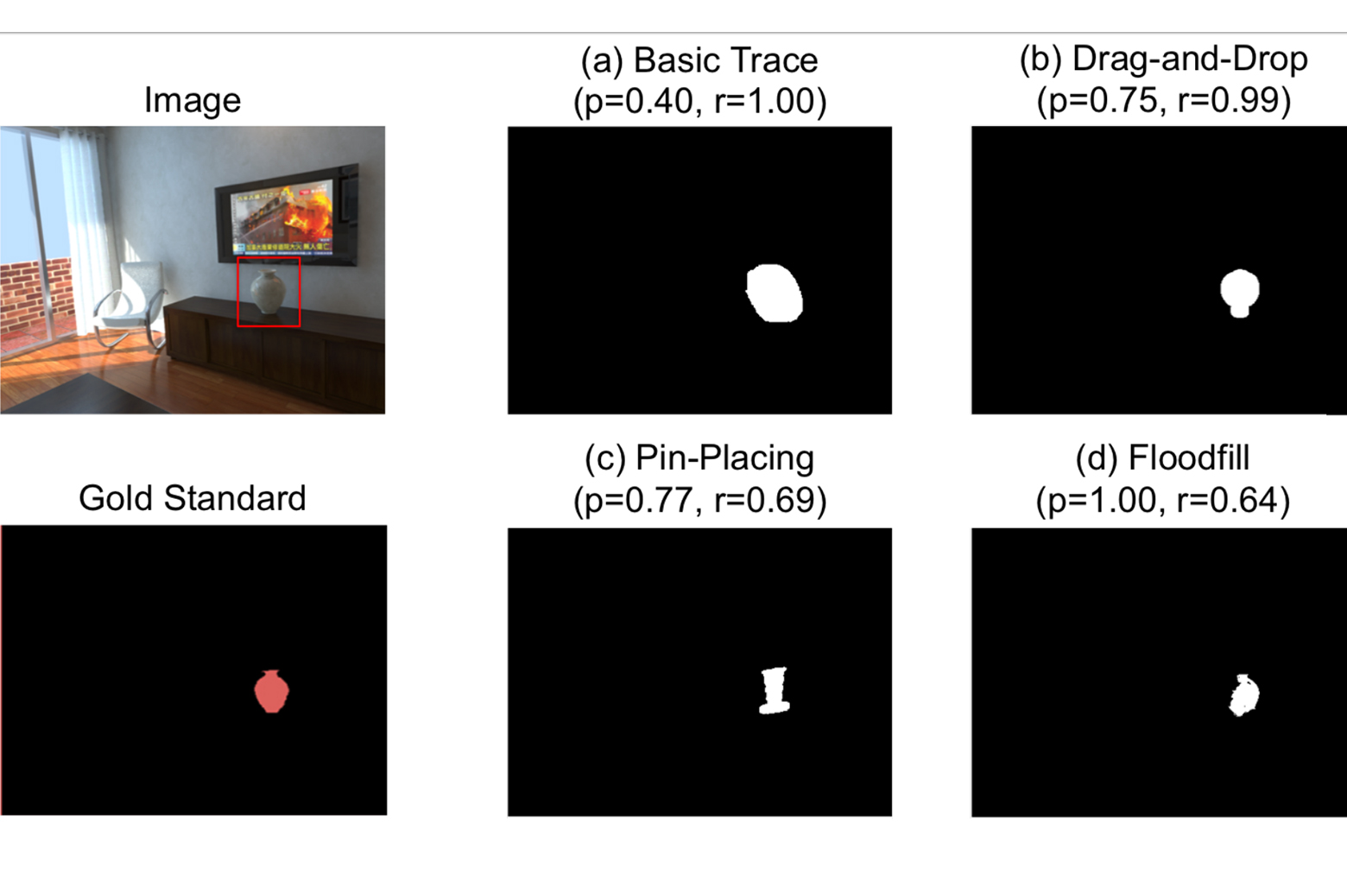

PhD student Jean Young Song earned a Best Student Paper Honorable Mention at the Intelligent User Interfaces (IUI 2018) conference in Tokyo. Her paper, “Two Tools are Better Than One: Tool Diversity as a Means of Improving Aggregate Crowd Performance,” offers an improved solution to the problem of image segmentation in computer vision by introducing a new way to think about leveraging human effort.

Image segmentation is how we visually tell where one object ends and another begins, but it has proven to be a challenging skill for computer vision (CV) systems. This skill will be necessary to enable autonomous cars to identify pedestrians or in-home robots help people with motor impairments, among many other applications.

One popular means of training CV systems to identify objects is to crowdsource the generation of data sets, using human understanding to produce large, manually-demarcated collections of images. Designing the systems that collect these sets is challenging, though, since the data is often error-prone. Individual tool designs can bias how and where people make mistakes, resulting in shared errors that remain even after aggregating the many responses together.

Song set out to build a crowdsourcing workflow that reduces these error biases using a technique called “multi-tool decomposition.” Standard aggregation methods in crowdsourcing try to design and use the single best tool available to reach high individual accuracy. Song’s study shows that using multiple effective tools can diversify the error patterns in worker responses and help systems achieve higher combined accuracy.

Enlarge

Enlarge

The study used four common classes of annotation tools, demonstrating that each causes worker responses to suffer from similar errors when used for the same images. By combining these different tools and aggregating their results, these errors and biases can be offset, improving the accuracy of training datasets overall.

Song is advised by Prof. Walter S. Lasecki.

MENU

MENU